Machine learning (ML) is revolutionizing industries by enabling computers to learn from data and make intelligent decisions. However, at the core of ML lies statistics, which provides the fundamental tools to understand, analyze, and interpret data effectively. Without statistical knowledge, building reliable and accurate ML models becomes challenging.

Whether you’re working on predictive analytics, AI-driven automation, or deep learning applications, a strong foundation in statistics is essential. Let’s explore the key reasons why statistics plays a crucial role in machine learning.

1. Understanding Data

Before applying ML algorithms, data scientists need to explore and understand data. Descriptive statistics, including measures like mean, median, mode, variance, and standard deviation, help summarize data and identify patterns.
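As a quick illustration, Python's built-in `statistics` module computes all of these summaries directly. The order counts below are hypothetical values chosen for the sketch, not real data.

```python
import statistics

# Hypothetical sample: daily order counts for one store (illustrative values only)
orders = [12, 15, 15, 18, 20, 22, 25, 90]

summary = {
    "mean": statistics.mean(orders),
    "median": statistics.median(orders),
    "mode": statistics.mode(orders),
    "variance": statistics.variance(orders),  # sample variance (n - 1 denominator)
    "stdev": statistics.stdev(orders),        # sample standard deviation
}

for name, value in summary.items():
    print(f"{name:>8}: {value:.2f}")
```

Comparing the numbers is already informative. The single large value (90) pulls the mean up to 27.125, while the median stays at 19. A gap like that is often the first hint that the data is skewed or contains an outlier.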

By leveraging statistical techniques, you can detect outliers and missing values and examine how the data is distributed, ensuring that your data is well prepared for training ML models. A poor understanding of data can lead to biased models and incorrect predictions.
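One common way to do this is to count missing entries and flag outliers with Tukey's rule, which treats points more than 1.5 × IQR (interquartile range) outside the quartiles as suspicious. The sketch below uses only the standard library. The column values are hypothetical, and it assumes missing values appear as `None` or `NaN`.

```python
import math
import statistics

# Hypothetical raw column containing missing values (None, NaN) and one suspicious reading
raw = [12.0, 15.0, None, 18.0, 20.0, 22.0, 25.0, 90.0, float("nan")]

def is_missing(v):
    """Treat None and NaN as missing (an assumption about how gaps are encoded)."""
    return v is None or (isinstance(v, float) and math.isnan(v))

missing_count = sum(1 for v in raw if is_missing(v))
values = [v for v in raw if not is_missing(v)]

# Tukey's rule: flag points lying beyond 1.5 * IQR outside the first and third quartiles
q1, _, q3 = statistics.quantiles(values, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [v for v in values if v < low or v > high]

print("missing:", missing_count)
print("outliers:", outliers)
```

`statistics.quantiles` uses its "exclusive" method by default. Other quartile conventions can shift the fences slightly, so borderline points deserve a manual look rather than automatic removal.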

2. Probability Theory in ML

Many machine learning models are built upon probability theory, which helps in modeling uncertainty and making probabilistic predictions. Algorithms such as Naïve Bayes, Hidden Markov Models, and Bayesian Networks heavily rely on probability.

Key probability concepts used in ML include:

✅ Conditional probability – Understanding dependencies between variables.

✅ Probability distributions – Distributions such as the Normal, Poisson, and Bernoulli help model real-world phenomena.

✅ Bayes’ Theorem – Fundamental for Bayesian ML models and decision-making.
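Conditional probability and Bayes' Theorem come together in a classic diagnostic-test calculation: P(disease | positive) = P(positive | disease) · P(disease) / P(positive). The prevalence, sensitivity, and specificity values below are assumptions chosen for illustration.

```python
def posterior(prior, sensitivity, specificity):
    """P(disease | positive test) computed with Bayes' theorem."""
    p_pos_given_disease = sensitivity
    p_pos_given_healthy = 1 - specificity  # false positive rate
    # Law of total probability: overall chance of a positive test
    p_pos = p_pos_given_disease * prior + p_pos_given_healthy * (1 - prior)
    return p_pos_given_disease * prior / p_pos

# Assumed numbers: 1% prevalence, 99% sensitivity, 95% specificity
p = posterior(prior=0.01, sensitivity=0.99, specificity=0.95)
print(f"P(disease | positive) = {p:.3f}")
```

With these numbers the posterior is exactly 1/6 (about 0.167). Even a fairly accurate test produces mostly false positives when the condition is rare, which is exactly the kind of reasoning error a grounding in probability helps avoid.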

Understanding probability allows ML engineers to make more accurate predictions in real-world applications, such as fraud detection, spam filtering, and medical diagnosis.
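To make the spam-filtering case concrete, here is a minimal multinomial Naïve Bayes sketch with add-one (Laplace) smoothing. The four training messages are hypothetical, and real filters use far more data and better tokenization. It scores each class by log P(class) plus the sum of log P(word | class), assuming words are independent given the class.

```python
import math
from collections import Counter

# Tiny hypothetical training set: (message, label)
train = [
    ("win money now", "spam"),
    ("limited offer win prize", "spam"),
    ("meeting at noon", "ham"),
    ("lunch meeting tomorrow", "ham"),
]

def fit(examples):
    """Count class frequencies and per-class word frequencies."""
    class_counts = Counter(label for _, label in examples)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in examples:
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def predict(text, class_counts, word_counts, vocab):
    """Return the class with the highest log-posterior score."""
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        score = math.log(n / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.split():
            # Add-one smoothing: unseen words get count 1 instead of 0
            score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)

model = fit(train)
print(predict("win a prize", *model))
print(predict("team meeting at noon", *model))
```

Working in log space avoids multiplying many small probabilities together, which would otherwise underflow on longer messages.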

3. Hypothesis Testing & Decision Making

ML models are built to make predictions and draw conclusions, but how do we know if a model’s predictions are statistically valid? This is where hypothesis testing plays a critical role.

Statistical tools such as t-tests, ANOVA (Analysis of Variance), p-values, and confidence intervals help validate ML models by indicating whether an observed result, such as one model outperforming another, is statistically significant or could plausibly be due to chance.
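As one example, the sketch below asks whether one model's accuracy really beats another's. It uses a permutation test instead of a t-test because a permutation test needs only the standard library, but the idea is the same: estimate how often a difference at least this large would appear if the two sets of scores came from the same source. The scores are hypothetical, and the test assumes they are independent samples. That assumption is only approximate when runs share the same dataset.

```python
import random
import statistics

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    count = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign scores to the two groups
        perm_a, perm_b = pooled[:len(a)], pooled[len(a):]
        if abs(statistics.mean(perm_a) - statistics.mean(perm_b)) >= observed:
            count += 1
    # Add-one correction keeps the estimated p-value strictly positive
    return (count + 1) / (n_permutations + 1)

# Hypothetical accuracy scores from repeated runs of two models
model_a = [0.81, 0.83, 0.80, 0.82, 0.84, 0.81]
model_b = [0.85, 0.87, 0.86, 0.88, 0.85, 0.86]

p_value = permutation_test(model_a, model_b)
print(f"p-value: {p_value:.4f}")
```

A small p-value suggests the gap between the models is unlikely to be a fluke of these particular runs. A large one means the data cannot distinguish the models.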

For example, in A/B testing, businesses use hypothesis testing to determine whether a new feature improves user engagement, helping them make data-driven decisions.
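A common way to analyze such an experiment is a two-proportion z-test on conversion rates. The sketch below uses `statistics.NormalDist` from the standard library for the p-value. The user counts are hypothetical, and the normal approximation assumes reasonably large samples.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical experiment: 5,000 users per variant, new feature shown in variant B
z, p = two_proportion_z_test(conv_a=400, n_a=5000, conv_b=470, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here the conversion rate rises from 8.0% to 9.4%, and the resulting p-value falls below the conventional 0.05 threshold. The choice of threshold, and deciding it before looking at the data, remains a business decision rather than a statistical one.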

4. Feature Selection & Dimensionality Reduction

Machine learning models often perform better when they use the most relevant features. Feature selection techniques such as chi-square tests, mutual information, and the Fisher score help identify the most informative features, remove unnecessary variables, and improve model efficiency.
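Of the techniques above, mutual information is easy to sketch for discrete features. It measures how much knowing a feature reduces uncertainty about the label, and it is zero when the two are independent. The label and both candidate features below are hypothetical. In practice, libraries such as scikit-learn provide estimators for this.

```python
import math
from collections import Counter

def mutual_information(feature, target):
    """Mutual information (in nats) between two discrete sequences."""
    n = len(feature)
    joint = Counter(zip(feature, target))
    px = Counter(feature)
    py = Counter(target)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * math.log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi

# Hypothetical binary label and two candidate features
label     = [1, 1, 0, 0, 1, 0, 1, 0]
feature_a = [1, 1, 0, 0, 1, 0, 1, 0]  # perfectly informative: identical to the label
feature_b = [1, 0, 1, 0, 0, 1, 1, 0]  # balanced across both classes: carries no information

scores = {
    "feature_a": mutual_information(feature_a, label),
    "feature_b": mutual_information(feature_b, label),
}
print(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))
```

`feature_a` scores ln 2 (the full entropy of a balanced binary label) and `feature_b` scores 0, so a selector ranking by this score would keep the first and drop the second.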

Additionally, dimensionality reduction techniques such as Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) let data scientists replace many input variables with a smaller set of derived ones while retaining most of the important information. This reduces computation time and, by removing noise and redundancy, can also improve model accuracy.
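To show what PCA does under the hood, the sketch below finds the first principal component (the direction of greatest variance) by running power iteration on the covariance matrix, then projects 2-D points onto it. It is a pure-Python teaching sketch on hypothetical data. Production code would normally use a library implementation such as scikit-learn's `PCA`.

```python
import math
import random

def first_principal_component(rows, iterations=200, seed=0):
    """Estimate the top principal component via power iteration on the covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d)
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(d)]  # positive random starting vector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient: variance captured along v
    variance = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    total_variance = sum(cov[i][i] for i in range(d))
    return v, variance / total_variance, centered

# Hypothetical 2-D data lying close to the line y = 2x
data = [[1.0, 2.1], [2.0, 3.9], [3.0, 6.2], [4.0, 7.8], [5.0, 10.1]]
component, explained, centered = first_principal_component(data)

# Project each centered point onto the component: 2 features -> 1
projected = [sum(c[j] * component[j] for j in range(len(component))) for c in centered]
print("component:", [round(x, 3) for x in component])
print("explained variance ratio:", round(explained, 4))
print("1-D projection:", [round(x, 3) for x in projected])
```

Because the points lie almost on a line, one component captures more than 99% of the total variance, so the single projected column keeps nearly all the information in the original two.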

5. Evaluating Model Performance

Building an ML model is just the first step; evaluating its performance is equally important. Statistics provides key evaluation metrics such as:

📊 Accuracy – Measures overall correctness of the model.

📊 Precision & Recall – Important for imbalanced datasets, such as those in fraud detection.

📊 F1-Score – The harmonic mean of precision and recall, giving a single score that balances the two.

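📊 ROC Curve & AUC – The ROC curve plots the true positive rate against the false positive rate across decision thresholds, and the AUC (area under the curve) summarizes it in a single number for assessing classification models.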
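The metrics above can be computed from scratch to see how they relate. The sketch below derives accuracy, precision, recall, and F1 from confusion-matrix counts. It computes AUC as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, which is equivalent to the area under the ROC curve. The labels, scores, and 0.5 threshold are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def roc_auc(y_true, scores):
    """AUC as P(random positive outranks random negative); ties count as 1/2."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical imbalanced data: 2 fraudulent transactions among 10
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
scores = [0.1, 0.2, 0.1, 0.3, 0.2, 0.4, 0.1, 0.6, 0.7, 0.3]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold

print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))
```

This model reaches 80% accuracy, but so would a model that never flags fraud at all. Precision and recall (both 0.5 here) reveal that it catches only half the frauds and raises a false alarm for every true one. That is why accuracy alone is misleading on imbalanced data.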

Comparing these measures on training data and on held-out data helps reveal whether a model is overfitting (memorizing the training data) or underfitting (failing to learn the underlying patterns), supporting the development of a robust and generalizable ML system.

6. Avoiding Overfitting & Underfitting

Overfitting occurs when a model fits the noise in its training data so closely that it performs poorly on new data. Underfitting happens when a model is too simple to capture the patterns in the data. Both issues lead to poor generalization and inaccurate predictions.

Techniques such as regularization (L1, L2) and cross-validation, grounded in an understanding of the bias-variance tradeoff, help tune ML models and prevent these problems. Understanding these concepts allows ML practitioners to build models that generalize well to unseen data.
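Cross-validation and L2 regularization can be sketched together. The code below fits a one-feature linear model y ≈ w · x (no intercept) with a ridge penalty λ, and it scores each λ by average mean squared error on held-out folds. The data is hypothetical, generated from y = 3x plus Gaussian noise. With only one parameter there is little room to overfit, so the visible effect is the underfitting side: a very large λ shrinks the slope toward zero and the held-out error rises.

```python
import random

def ridge_slope(xs, ys, lam):
    """Closed-form L2-regularized slope for y ≈ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def k_fold_mse(xs, ys, lam, k=5, seed=0):
    """Average held-out mean squared error across k folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    errors = []
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        # Fit on the training folds only, then evaluate on the held-out fold
        w = ridge_slope([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold))
    return sum(errors) / k

# Hypothetical noisy data generated from y = 3x + noise
rng = random.Random(42)
xs = [rng.uniform(-1, 1) for _ in range(50)]
ys = [3 * x + rng.gauss(0, 0.5) for x in xs]

for lam in [0.0, 1.0, 10.0, 100.0]:
    print(f"lambda={lam:>6}: CV MSE = {k_fold_mse(xs, ys, lam):.3f}")
```

The same loop works for richer models. One would pick the λ (or model complexity) with the lowest cross-validated error, which balances bias against variance without ever touching a final test set.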

Conclusion

Statistics is not just a theoretical subject—it is a practical and essential tool for machine learning. It helps in understanding data, improving model accuracy, reducing errors, and ensuring that ML systems make reliable predictions.

Without statistics, machine learning would be nothing more than guesswork. Whether you’re a beginner or an advanced data scientist, mastering statistics will make you a better ML practitioner.

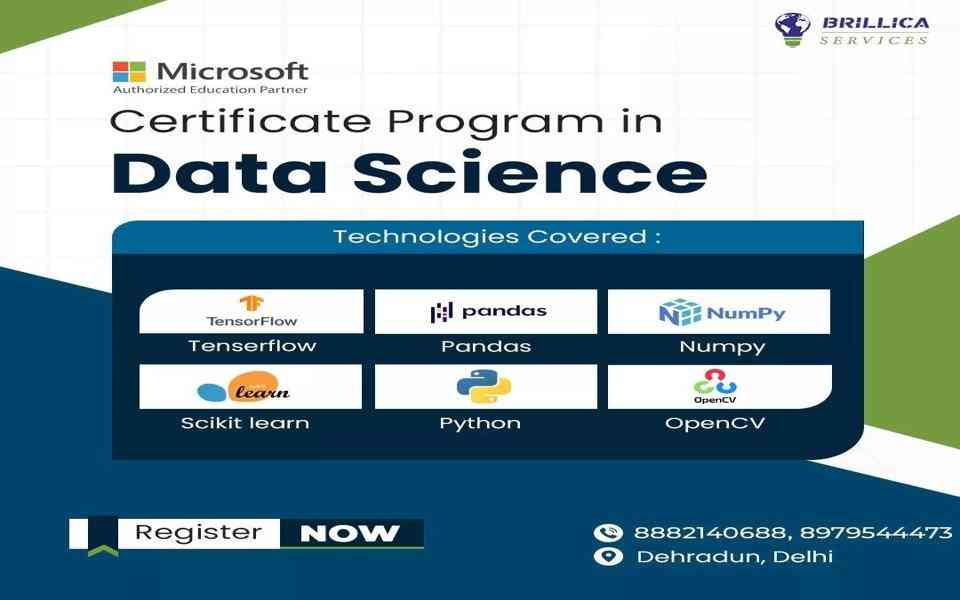

Want to build a strong foundation in Machine Learning and Statistics? Brillica Services offers expert-led Machine Learning courses to help you gain the necessary skills for a successful career in AI and Data Science! 🚀

📢 Start your journey today!

#MachineLearning #Statistics #AI #DataScience #LearnWithBrillica