Synoptix AI delivers enterprise-grade AI performance evaluation through real-time analytics, enabling businesses to monitor, measure, and optimise their AI models.

Artificial intelligence is no longer an experiment for enterprises. It’s an active part of decision-making, automation, and customer interaction. But as models become more complex, keeping them accurate, safe, and compliant has become a major challenge.

That’s where AI Performance Evaluation comes in. It’s how teams measure the reliability, bias, and effectiveness of models before and after deployment. Many organisations still depend on manual audits to check model outputs. Others have moved toward AI Performance Evaluation Tools that automate and scale the process.

So, which approach actually works better as enterprises expand their use of AI? Let’s break it down.

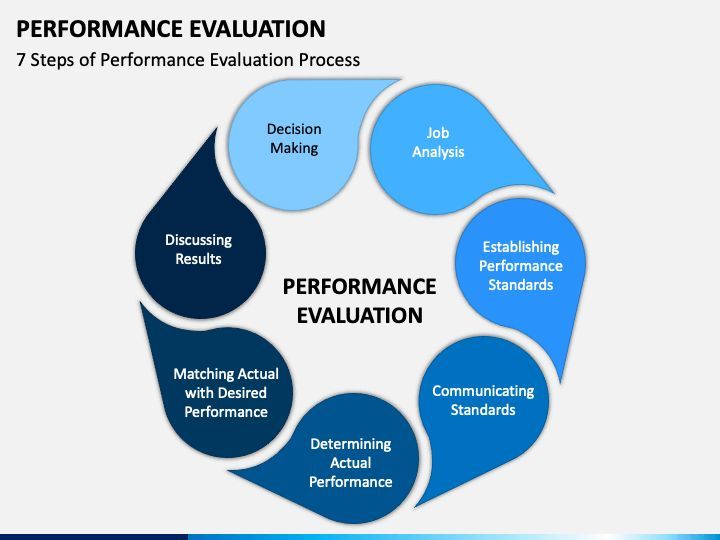

AI Performance Evaluation is a structured way to monitor how well an AI model performs in real-world use. It’s not just about accuracy scores or benchmarks. It covers fairness, explainability, and how models behave under different conditions.

In an enterprise AI platform, this process gives teams clear visibility into what their models are doing, and why. It helps detect drift, bias, or prompt errors before they affect users or business decisions.
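Drift detection is easier to picture with a small example. The sketch below uses the Population Stability Index (PSI), one common way to compare a baseline distribution of model scores against live scores. It is an illustrative choice, not necessarily the method Synoptix AI uses. The function name, bin count, and example scores are all hypothetical.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    live: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between two score samples; larger values mean more drift."""
    lo = min(min(baseline), min(live))
    hi = max(max(baseline), max(live))
    width = (hi - lo) / bins or 1.0  # avoid division by zero if all values are equal

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value lands in the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical scores: last week's baseline versus this week's live traffic.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [s + 0.3 for s in baseline_scores]
print(population_stability_index(baseline_scores, baseline_scores))  # 0.0
print(population_stability_index(baseline_scores, shifted_scores) > 0.25)  # True
```

A PSI near zero means the two distributions match. A frequently quoted rule of thumb treats values above roughly 0.25 as significant drift, though teams usually tune that threshold to their own data.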

The growing adoption of AI agents to support back-office operations has made evaluation even more critical. Models interact with sensitive data, and even small errors can multiply across systems.

That’s why evaluation isn’t optional anymore. It’s a core part of every responsible AI lifecycle.

Data scientists or compliance teams perform manual model audits. They manually inspect datasets, test model responses, and record performance metrics.

This is the longest-established approach. It allows detailed control and human judgement, and auditors can spot context issues or ethical risks that automated tools might miss.

However, manual audits take time. As the number of models grows — from chatbots to RAG-based enterprise search systems — it becomes difficult to keep up. Each model version needs separate testing, and human reviewers can only cover so much data.

That’s fine for one model. But what about hundreds running across departments?

AI Performance Evaluation Tools automate the review process. They track accuracy, latency, and response quality in real time. They also benchmark models against predefined criteria like fairness, toxicity, and bias.
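To make "predefined criteria" concrete, here is a minimal batch scorer. It assumes each test case has a reference answer and a measured latency. The record fields, the thresholds, and the keyword blocklist (a crude stand-in for a real toxicity or bias classifier) are all hypothetical, and none of it reflects a specific vendor's API.

```python
import math
from dataclasses import dataclass


@dataclass
class EvalRecord:
    response: str      # what the model returned
    reference: str     # the expected answer for this test case
    latency_ms: float  # end-to-end response time


# Hypothetical blocklist standing in for a real toxicity classifier.
BLOCKED_TERMS = {"idiot", "stupid"}


def evaluate(records: list[EvalRecord],
             min_accuracy: float = 0.9,
             max_p95_ms: float = 2000.0) -> dict:
    """Score a non-empty batch of responses against fixed, predefined criteria."""
    n = len(records)
    correct = sum(r.response.strip().lower() == r.reference.strip().lower()
                  for r in records)
    flagged = sum(any(term in r.response.lower() for term in BLOCKED_TERMS)
                  for r in records)
    latencies = sorted(r.latency_ms for r in records)
    p95 = latencies[math.ceil(0.95 * n) - 1]  # nearest-rank 95th percentile
    accuracy = correct / n
    return {
        "accuracy": accuracy,
        "p95_latency_ms": p95,
        "flagged_rate": flagged / n,
        "passed": accuracy >= min_accuracy and p95 <= max_p95_ms and flagged == 0,
    }


batch = [
    EvalRecord("Paris", "paris", 120.0),
    EvalRecord("4", "4", 300.0),
    EvalRecord("You idiot", "Hello", 90.0),
]
print(evaluate(batch))
```

Because the same function runs on every batch, scores from one week can be compared directly with the next.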

Unlike manual audits, these tools can run continuously. That means problems are spotted as they happen — not weeks later during scheduled reviews.
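Continuous evaluation usually means checking a rolling window of recent results rather than a periodic snapshot. Below is a minimal sketch, assuming each response has already been scored as pass or fail. The class name, window size, and alert threshold are hypothetical values a team would tune.

```python
from collections import deque


class RollingMonitor:
    """Tracks pass/fail outcomes over the most recent `window` responses."""

    def __init__(self, window: int = 100, min_pass_rate: float = 0.95):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.min_pass_rate = min_pass_rate

    def record(self, passed: bool) -> bool:
        """Add one outcome and return True if the pass rate has dropped too low."""
        self.outcomes.append(passed)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait for a full window before alerting
        pass_rate = sum(self.outcomes) / len(self.outcomes)
        return pass_rate < self.min_pass_rate


monitor = RollingMonitor(window=10, min_pass_rate=0.8)
for _ in range(10):
    monitor.record(True)
for _ in range(3):
    alert = monitor.record(False)
print(alert)  # True: only 7 of the last 10 responses passed
```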

Many platforms, including Synoptix AI, now integrate evaluation dashboards that combine monitoring, reporting, and AI security checks in one view. They provide automated summaries, highlight anomalies, and help teams make data-backed improvements fast.

The result is less guesswork and more consistency.

Scalability is where manual audits hit their limit. Human auditors can only test a sample of outputs, while automated tools can evaluate every response.
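The cost of sampling can be quantified. Suppose reviewers check a uniform random sample of n responses out of N, and K of those N responses are faulty. The chance that the sample contains no faulty response at all is the hypergeometric probability C(N - K, n) / C(N, n). The sketch below computes it; the function name and the volumes in the example are hypothetical.

```python
import math


def prob_sample_misses_all(total: int, faulty: int, sample_size: int) -> float:
    """Chance that a uniform random sample (without replacement) contains no faulty items."""
    if sample_size > total - faulty:
        return 0.0  # the sample is too large to avoid every faulty item
    p = 1.0
    for i in range(sample_size):
        # Each draw must come from the remaining non-faulty items.
        p *= (total - faulty - i) / (total - i)
    return p


# Hypothetical month: 10,000 responses, 10 faulty (0.1%), 100 sampled for manual review.
p_miss = prob_sample_misses_all(10_000, 10, 100)
print(f"{p_miss:.1%}")  # roughly 90%: the audit most likely sees none of the faults
```

Evaluating every response removes this blind spot by construction, which is the scalability argument in numerical form.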

AI Performance Evaluation Tools can scale to millions of interactions, and they can evaluate new model versions as soon as they are released, so oversight keeps pace with updates.

In large enterprises, where hundreds of AI agents may run simultaneously, this scale is essential. It is what lets finance automation, customer support bots, and policy assistants operate in real time with confidence.

Manual audits can’t offer that pace.

When it comes to accuracy, human auditors bring depth, but machines bring consistency. Manual reviews can be subjective depending on who’s testing. Automated tools apply the same metrics every time, creating a reliable baseline for comparison.

That doesn’t mean humans are out of the picture. The best practice is to use both. Tools handle large-scale evaluations, while humans review edge cases, ethical nuances, or prompt injection risks.

This combined approach ensures both precision and oversight without overloading teams.
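The division of labour between tools and humans can be expressed as a simple triage rule. Confident automated scores pass or fail on their own. Uncertain scores, and anything flagged for a risk such as possible prompt injection, go to a human reviewer. This is a minimal sketch; the score bands, the risk flag, and the field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Triage:
    auto_passed: list[str] = field(default_factory=list)
    auto_failed: list[str] = field(default_factory=list)
    human_review: list[str] = field(default_factory=list)


def route(items: list[tuple[str, float, bool]],
          pass_at: float = 0.8, fail_at: float = 0.3) -> Triage:
    """items: (response_id, automated quality score in [0, 1], risk_flag)."""
    triage = Triage()
    for response_id, score, risk_flag in items:
        if risk_flag:
            triage.human_review.append(response_id)  # always escalate flagged cases
        elif score >= pass_at:
            triage.auto_passed.append(response_id)
        elif score <= fail_at:
            triage.auto_failed.append(response_id)
        else:
            triage.human_review.append(response_id)  # uncertain middle band
    return triage


result = route([("a", 0.95, False), ("b", 0.10, False),
                ("c", 0.55, False), ("d", 0.90, True)])
print(result.human_review)  # ['c', 'd']
```

Widening the middle band sends more cases to people; narrowing it trusts the tool more. That single knob is where humans "define the boundaries" in practice.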

Governance is another major reason organisations are shifting to AI Performance Evaluation Tools. Many compliance frameworks, including Australia’s AI technical standards, recommend continuous monitoring and documentation.

Tools automatically log every test and outcome, which supports audits, transparency, and traceability. Those records can also feed into existing governance and assurance processes, such as ISO/IEC 27001 information security management or IRAP-style assessments.

In contrast, manual audits often rely on spreadsheets or static reports. These are hard to update, verify, or link to real-time model behaviour.

Automated evaluation keeps everything in sync — policy, data, and performance.
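One way to make automatically generated evaluation logs tamper-evident is to chain each entry to the previous one with a cryptographic hash, so editing any past record breaks verification. This is an illustrative technique, not a description of how any particular platform stores its logs; the function names and entry fields are hypothetical.

```python
import hashlib
import json
import time


def _digest(body: dict) -> str:
    # Serialise deterministically so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> dict:
    """Append an evaluation event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"ts": time.time(), "event": dict(event), "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry makes this return False."""
    prev_hash = "0" * 64
    for entry in log:
        body = {key: entry[key] for key in ("ts", "event", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"model": "support-bot-v2", "test": "toxicity", "passed": True})
append_entry(audit_log, {"model": "support-bot-v2", "test": "accuracy", "score": 0.91})
print(verify(audit_log))  # True
audit_log[1]["event"]["score"] = 0.99  # simulate an after-the-fact edit
print(verify(audit_log))  # False
```

A spreadsheet offers no equivalent check: a changed cell looks the same as an original one.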

Manual audits require significant human time and specialised expertise. As AI models multiply, so do audit hours. That leads to bottlenecks and high operational costs.

AI Performance Evaluation Tools, on the other hand, automate repetitive tasks such as response sampling, scoring, and documentation.

This allows teams to focus on higher-value analysis rather than data entry. Over time, this shift saves both cost and time while improving output quality.

In most enterprises, models don’t operate alone. They connect with CRMs, chat systems, and knowledge graphs.

AI Performance Evaluation Tools integrate with these systems to capture live data. For example, when combined with a Synoptix search assistant or another enterprise search platform, evaluation tools can flag inaccurate or irrelevant responses as they occur.

This cross-system visibility helps maintain overall AI quality. It also supports flexible AI model selection, letting teams test multiple models side by side before deployment.

Manual audits struggle with this level of integration. They usually review models in isolation rather than in live business contexts.
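Side-by-side testing comes down to running every candidate model on the same test set with the same metric. The sketch below uses plain Python callables as stand-ins for real model endpoints and exact-match accuracy as the metric. Both are simplifying assumptions, and the model names are hypothetical.

```python
from typing import Callable


def compare_models(models: dict[str, Callable[[str], str]],
                   test_set: list[tuple[str, str]]) -> dict[str, float]:
    """Return exact-match accuracy for each candidate on an identical test set."""
    scores = {}
    for name, model in models.items():
        correct = sum(model(prompt).strip().lower() == expected.strip().lower()
                      for prompt, expected in test_set)
        scores[name] = correct / len(test_set)
    return scores


# Hypothetical stand-ins for two candidate models.
answers = {"Capital of France?": "Paris", "2 + 2?": "4"}
candidates = {
    "model-a": lambda prompt: answers.get(prompt, ""),
    "model-b": lambda prompt: "Paris",
}
tests = [("Capital of France?", "Paris"), ("2 + 2?", "4")]
scores = compare_models(candidates, tests)
print(scores)  # {'model-a': 1.0, 'model-b': 0.5}
print(max(scores, key=scores.get))  # model-a
```

In practice the metric would be whatever the team has standardised on, so that scores from different models are directly comparable.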

Transparency and Explainability

Automated evaluation supports transparency by generating dashboards and visual reports. These show how models make decisions, what influences outputs, and where risks appear.

For regulated sectors like government, finance, and healthcare, this visibility builds trust. It helps decision-makers understand model logic without diving into technical code.

Manual audits can still play a role in qualitative explanations. But for real-time reporting and accountability, automated tools deliver faster insights.

Automation isn’t perfect. AI Performance Evaluation Tools rely on predefined metrics and may miss subtle contextual issues. They can also inherit bias if the evaluation data isn’t diverse enough.

That’s why governance still needs human validation. Regular reviews, ethical checks, and human-in-the-loop testing keep automation accountable.

Another concern is over-reliance on a single evaluation system. Every tool has blind spots, and organisations that depend entirely on one risk overlooking them. Diversifying tools and approaches reduces that risk.

The future isn’t one or the other. It’s both.

The ideal setup is a layered model: tools handle the bulk of evaluation, while experts handle critical judgement. Humans define ethical boundaries, and tools enforce them at scale.

This balance maintains control without slowing innovation. It’s the same approach used by advanced enterprise agent platforms that rely on both automation and expert governance.

At Synoptix AI, performance evaluation is built directly into the platform. The AI Performance Evaluation dashboard measures precision, latency, and reliability across every agent and workflow.

It also covers AI content safety, fine-tuning and optimisation, and prompt selection, helping keep model behaviour aligned with enterprise and regulatory standards.

By combining automated testing with transparent reporting, Synoptix makes evaluation continuous — not a one-off task. This helps organisations maintain trust, scalability, and operational clarity as their AI systems grow.

To see how evaluation works in practice, you can book a demo and explore the platform in action.

As enterprises adopt more AI applications, performance evaluation will move closer to real time. AI Performance Evaluation Tools are likely to go beyond testing and begin correcting models on the fly.

We’ll see deeper integration with enterprise data search, LLM fine-tuning, and business integration workflows. AI systems will become more transparent, auditable, and self-improving — all under human supervision.

Manual audits will still have value for validation and ethical oversight, but scalability will depend on automation. The combination of both will define responsible AI adoption in the years ahead.

Manual audits served their purpose when AI systems were small and isolated. But modern enterprises need speed, consistency, and scale.

AI Performance Evaluation Tools meet that need by automating checks, surfacing insights, and maintaining governance in real time. Combined with expert human oversight, they form a balanced, scalable framework that keeps AI systems accountable, secure, and reliable.

If you’re ready to improve how your organisation measures and manages AI quality, explore Synoptix AI’s AI Performance Evaluation features and book a demo to see it in action.